|

SnapGen: Taming High-Resolution Text-to-Image Models for Mobile Devices with Efficient Architectures and Training

|

|

Wonderland: Navigating 3D Scenes from a Single Image

|

|

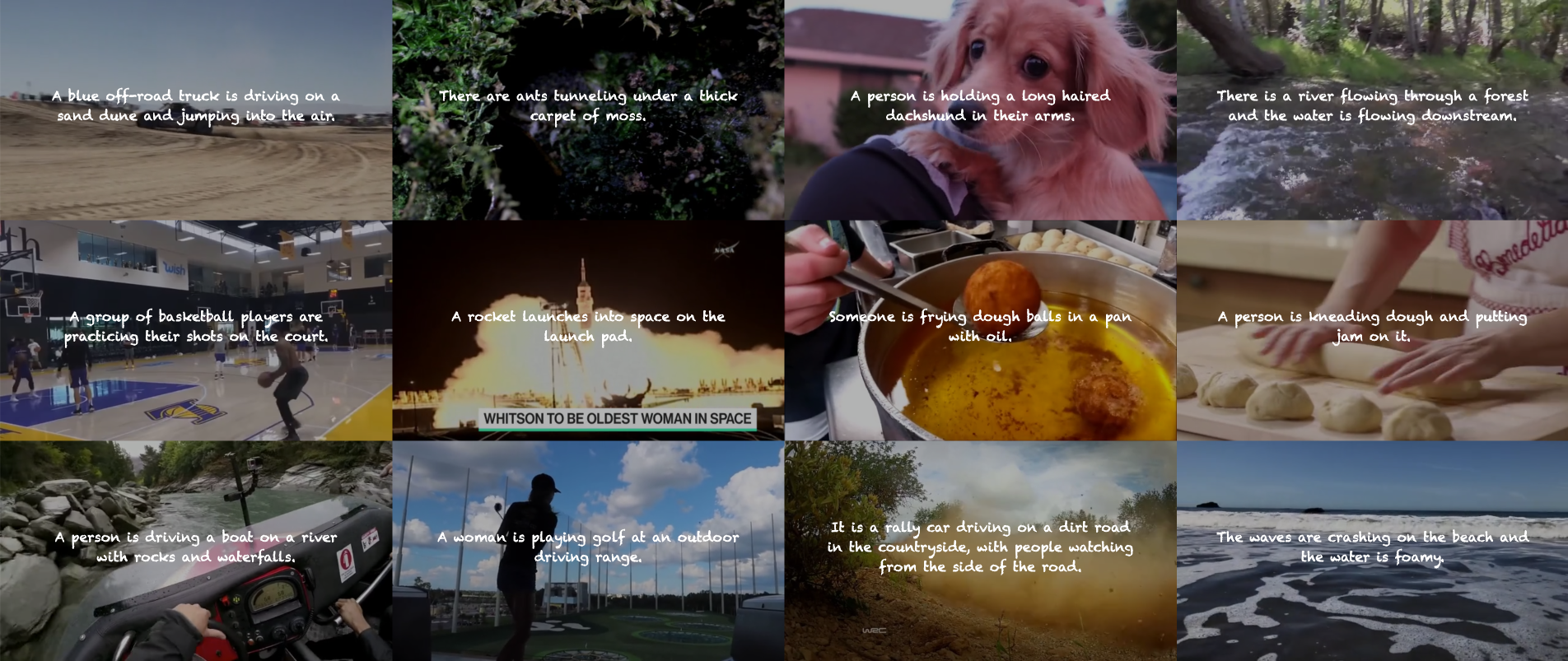

SnapGen-V: Generating a Five-Second Video within Five Seconds on a Mobile Device

|

|

Lightweight Predictive 3D Gaussian Splats

|

|

AsCAN: Asymmetric Convolution-Attention Networks for Efficient Recognition and Generation

|

|

SF-V: Single Forward Video Generation Model

|

|

BitsFusion: 1.99 bits Weight Quantization of Diffusion Model

|

|

Efficient Training with Denoised Neural Weights

|

|

E2GAN: Efficient Training of Efficient GANs for Image-to-Image Translation

|

|

TextCraftor: Your Text Encoder Can be Image Quality Controller

|

|

Snap Video: Scaled Spatiotemporal Transformers for Text-to-Video Synthesis

|

|

Panda-70M: Captioning 70M Videos with Multiple Cross-Modality Teachers

|

|

SPAD: Spatially Aware Multiview Diffusers

|

|

HyperHuman: Hyper-Realistic Human Generation with Latent Structural Diffusion

|

|

Magic123: One Image to High-Quality 3D Object Generation Using Both 2D and 3D Diffusion Priors

|

|

SnapFusion: Text-to-Image Diffusion Model on Mobile Devices within Two Seconds

|

|

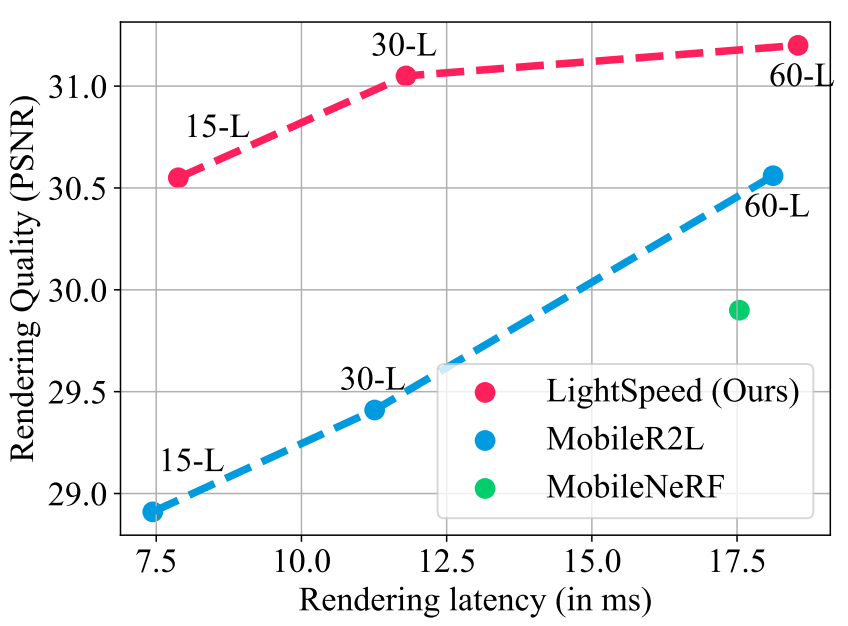

LightSpeed: Light and Fast Neural Light Fields on Mobile Devices

|

|

iNVS: Repurposing Diffusion Inpainters for Novel View Synthesis

|

|

Rethinking Vision Transformers for MobileNet Size and Speed

|

|

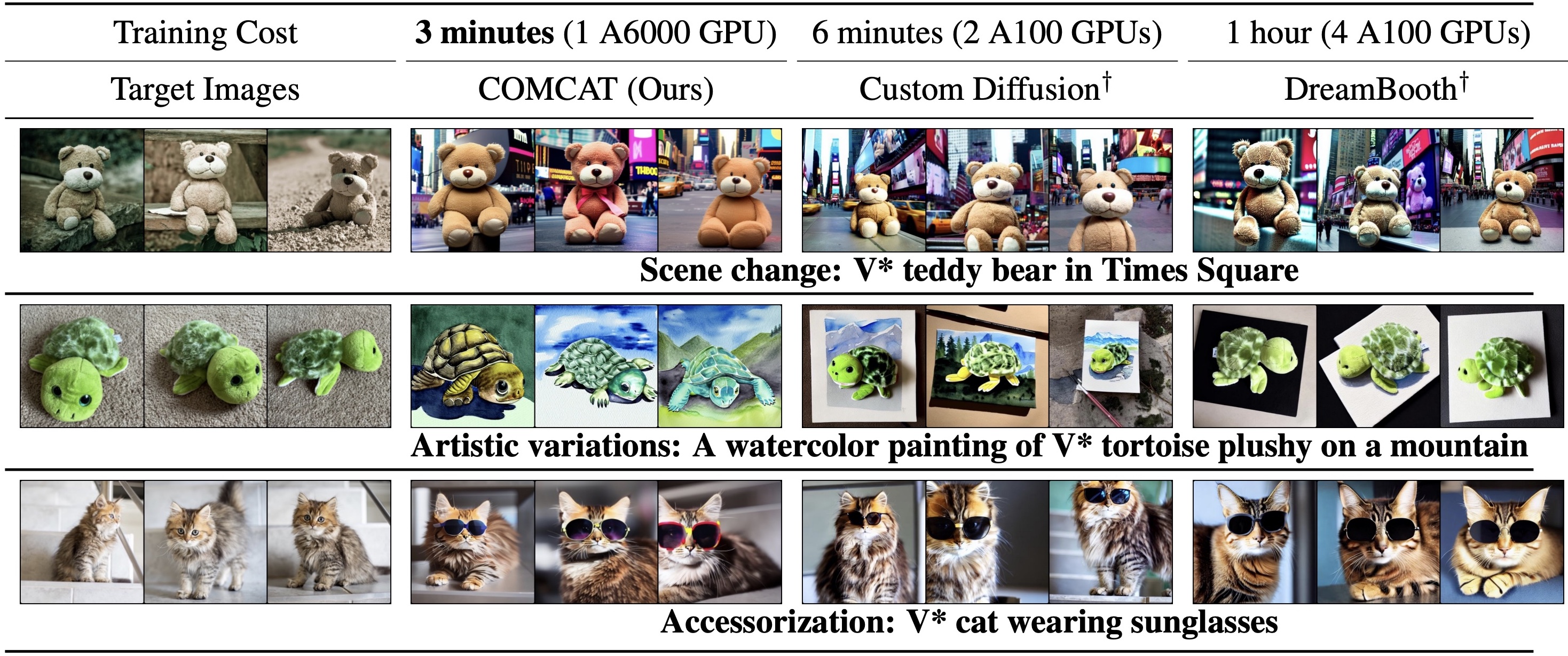

COMCAT: Towards Efficient Compression and Customization of Attention-Based Vision Models

|

|

Real-Time Neural Light Field on Mobile Devices

|

|

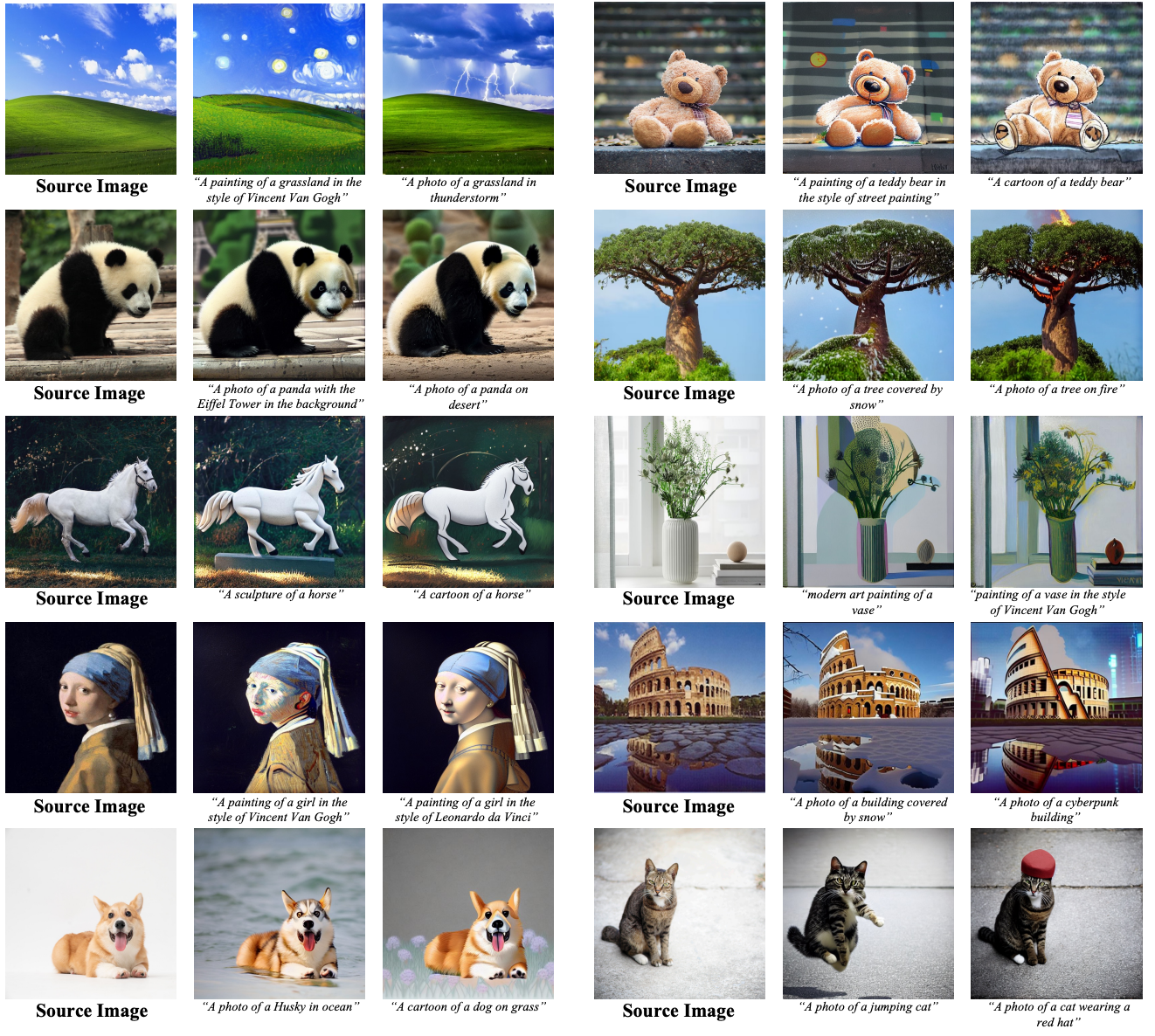

SINE: SINgle Image Editing with Text-to-Image Diffusion Models

|

|

Unsupervised Volumetric Animation

|

|

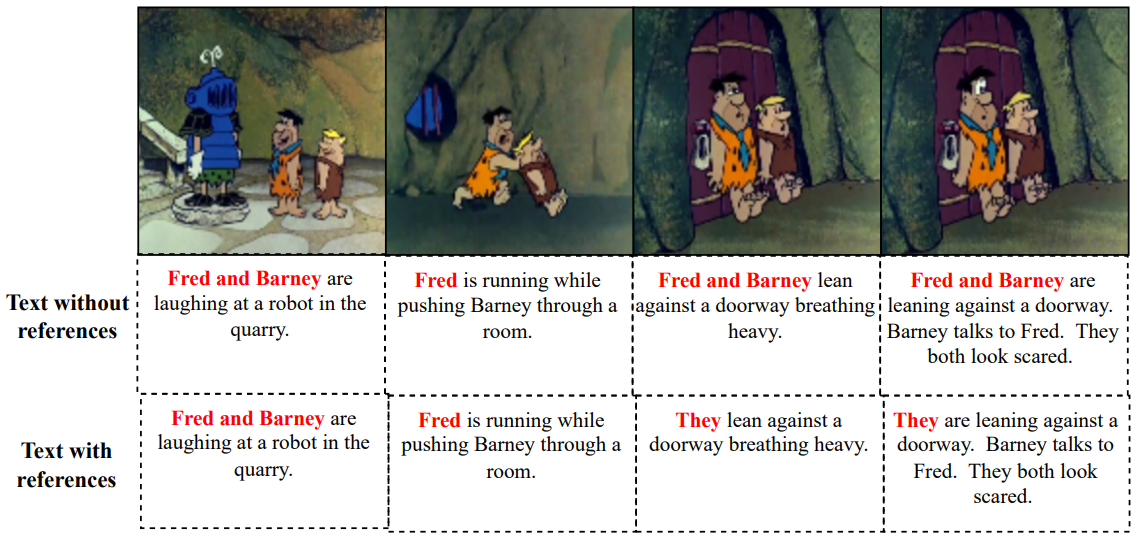

Make-A-Story: Visual Memory Conditioned Consistent Story Generation

|

|

Invertible Neural Skinning

|

|

3D Generation on ImageNet

|

|

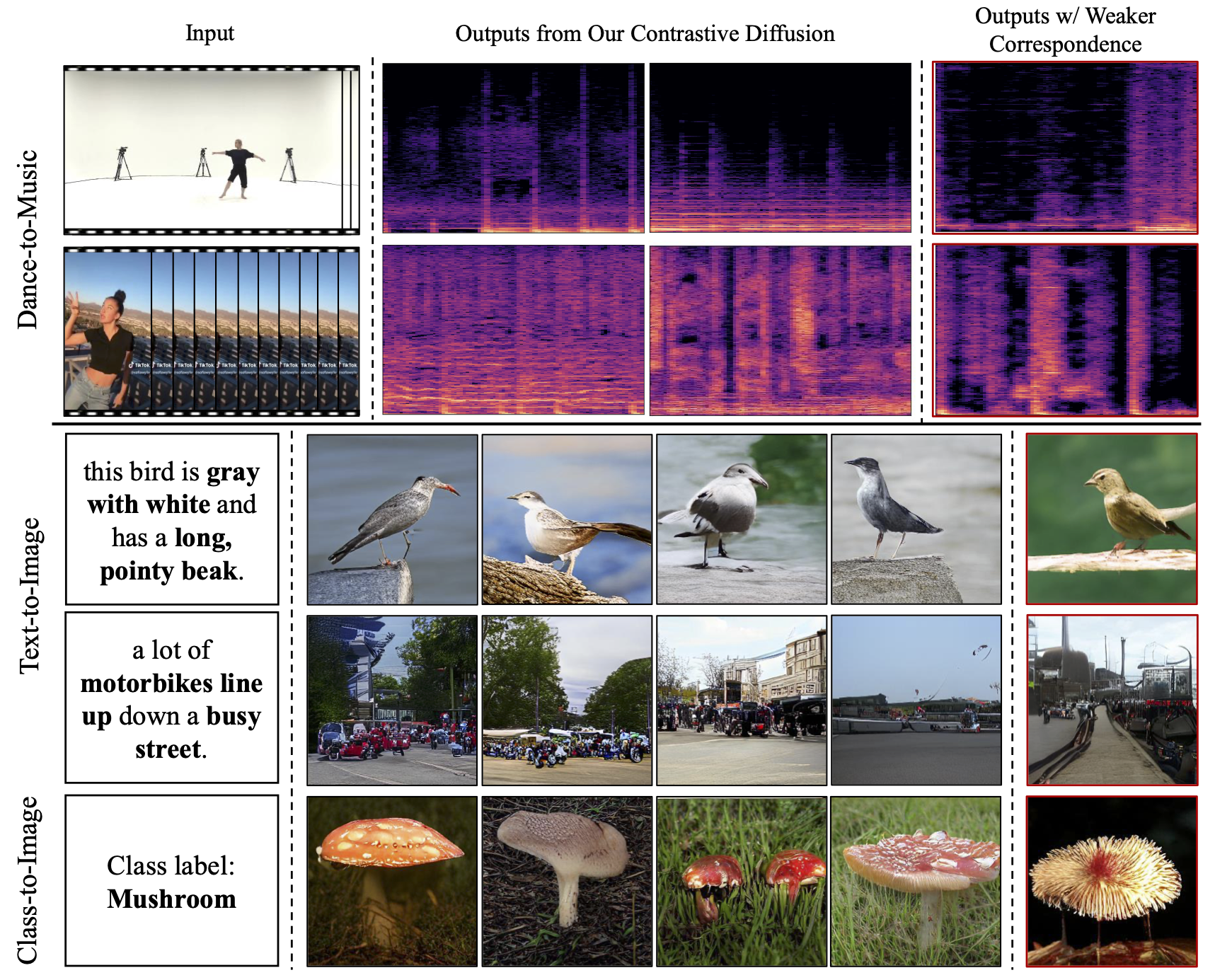

Discrete Contrastive Diffusion for Cross-Modal and Conditional Generation

|

|

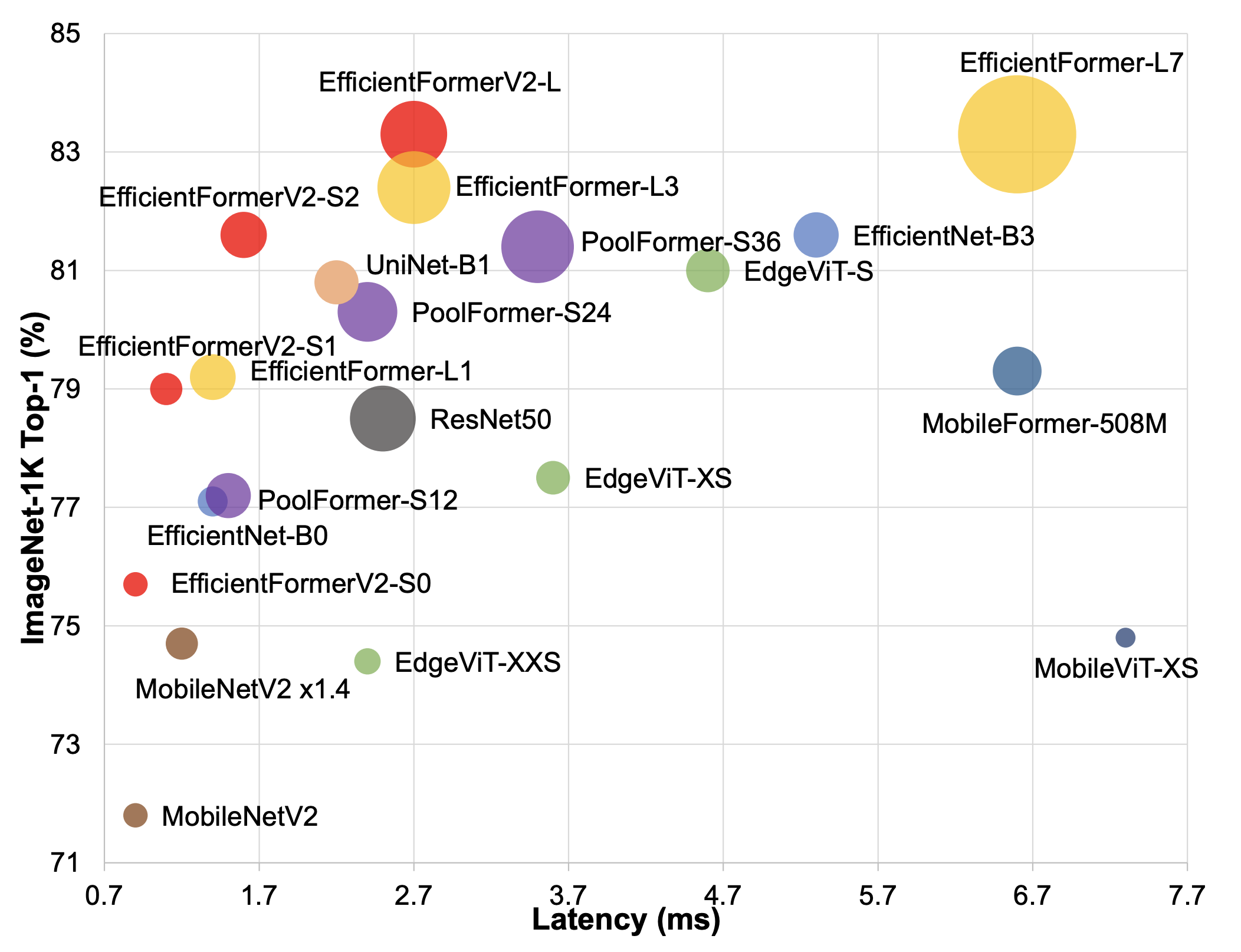

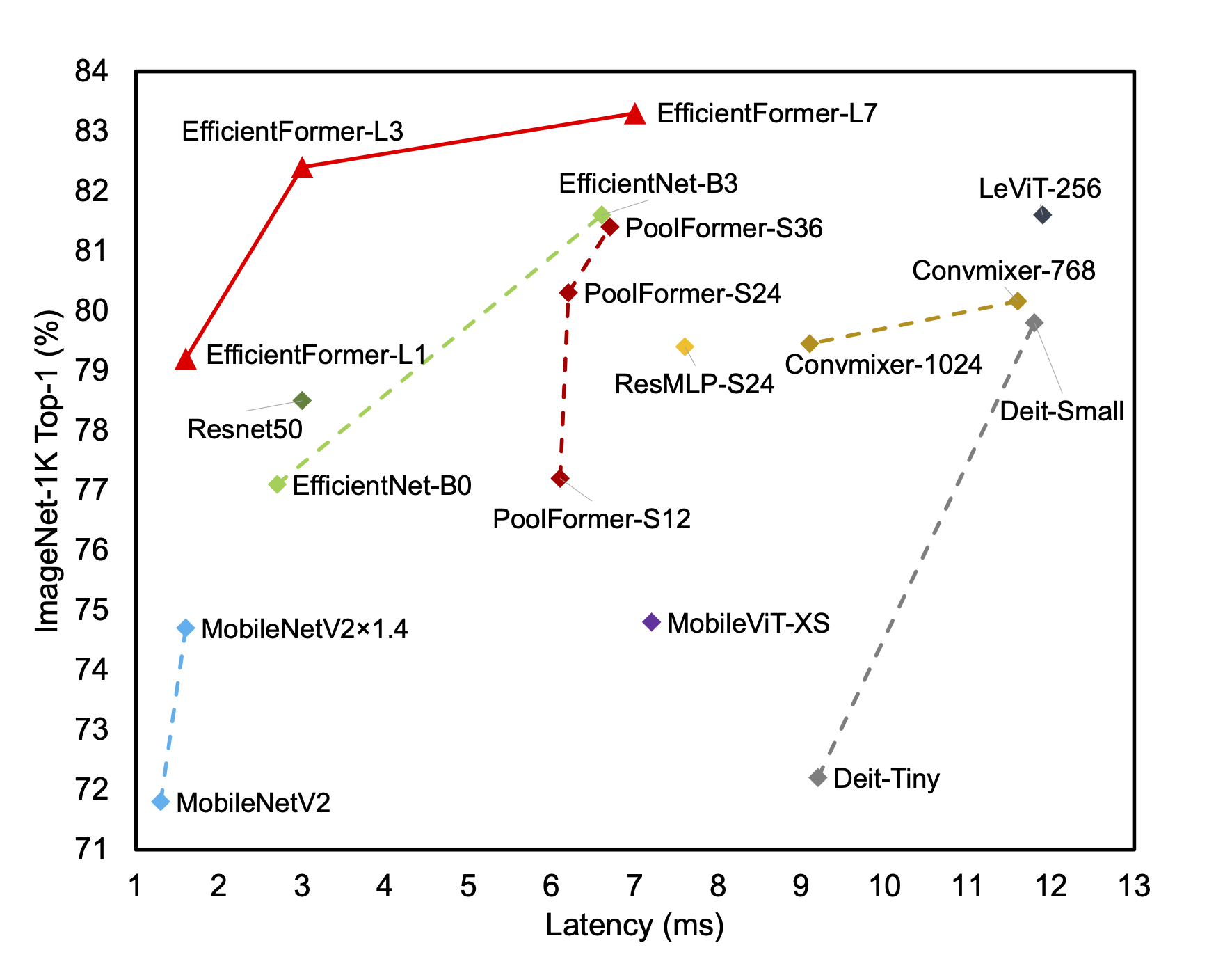

EfficientFormer: Vision Transformers at MobileNet Speed

|

|

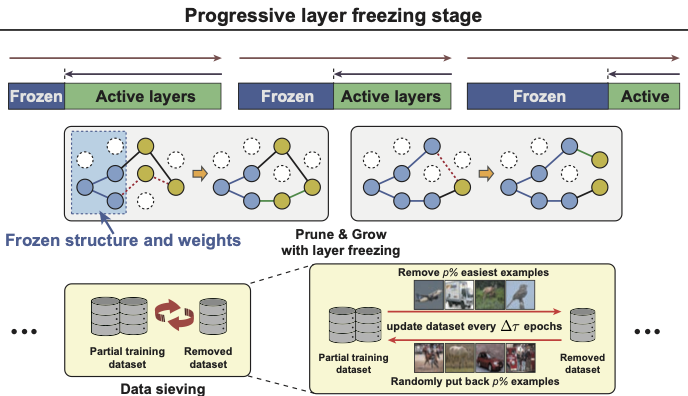

Layer Freezing & Data Sieving: Missing Pieces of a Generic Framework for Sparse Training

|

|

R2L: Distilling Neural Radiance Field to Neural Light Field for Efficient Novel View Synthesis

|

|

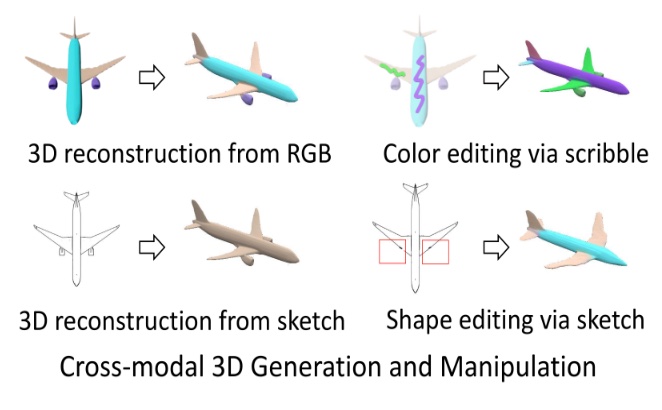

Cross-Modal 3D Shape Generation and Manipulation

|

|

Show Me What and Tell Me How: Video Synthesis via Multimodal Conditioning

|

|

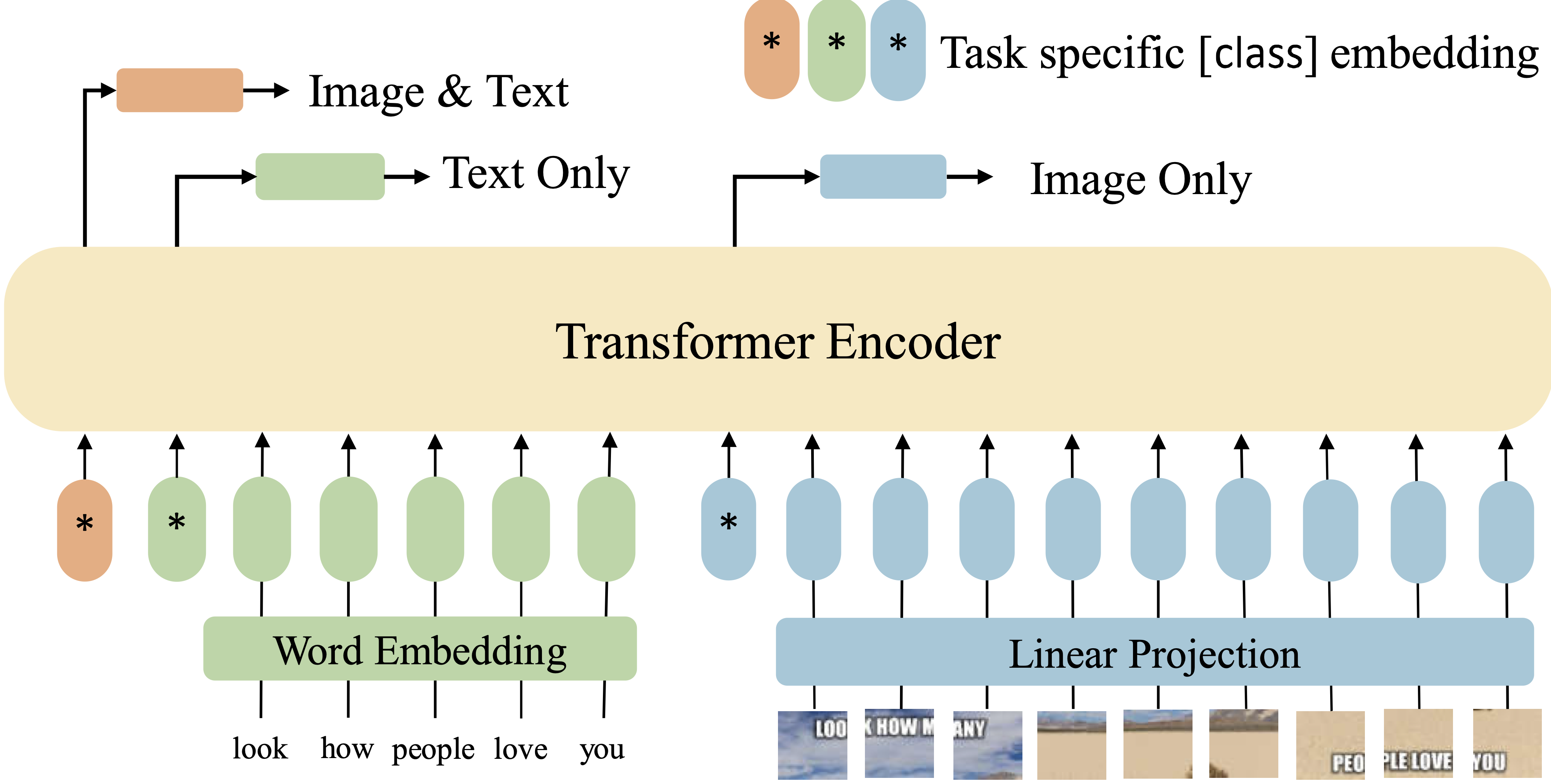

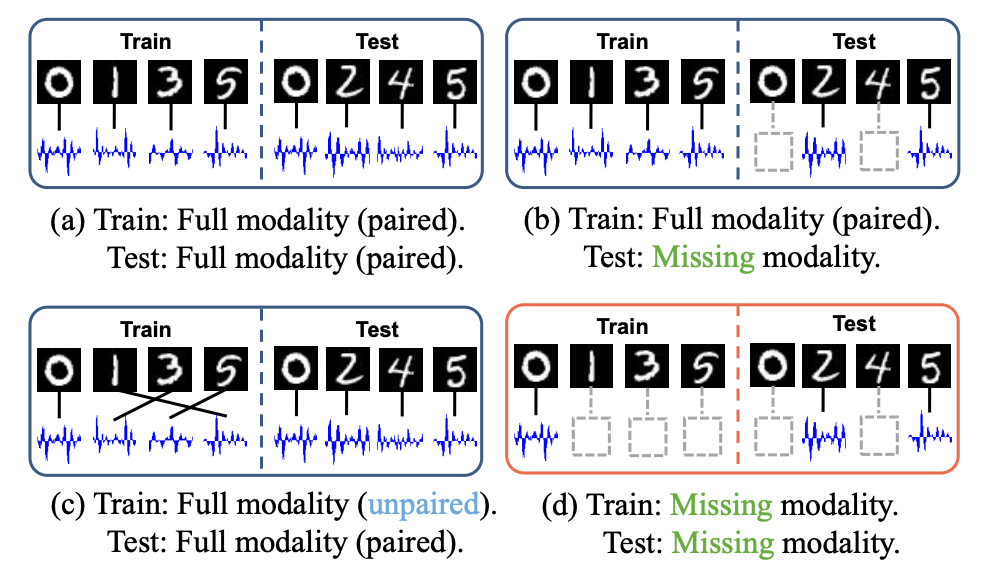

Are Multimodal Transformers Robust to Missing Modality?

|

|

In&Out: Diverse Image Outpainting via GAN Inversion

|

|

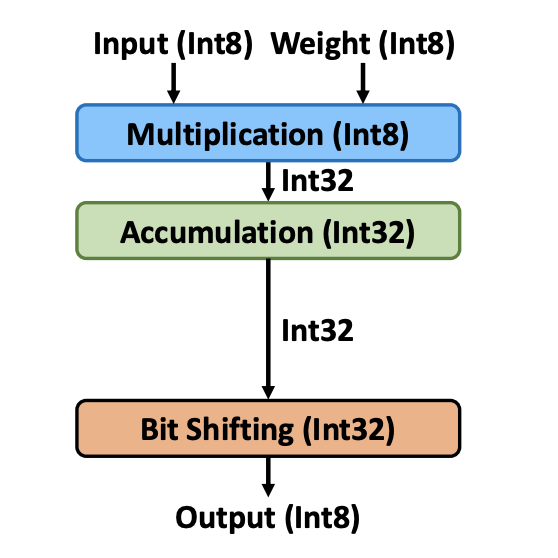

F8Net: Fixed-Point 8-bit Only Multiplication for Network Quantization

|

|

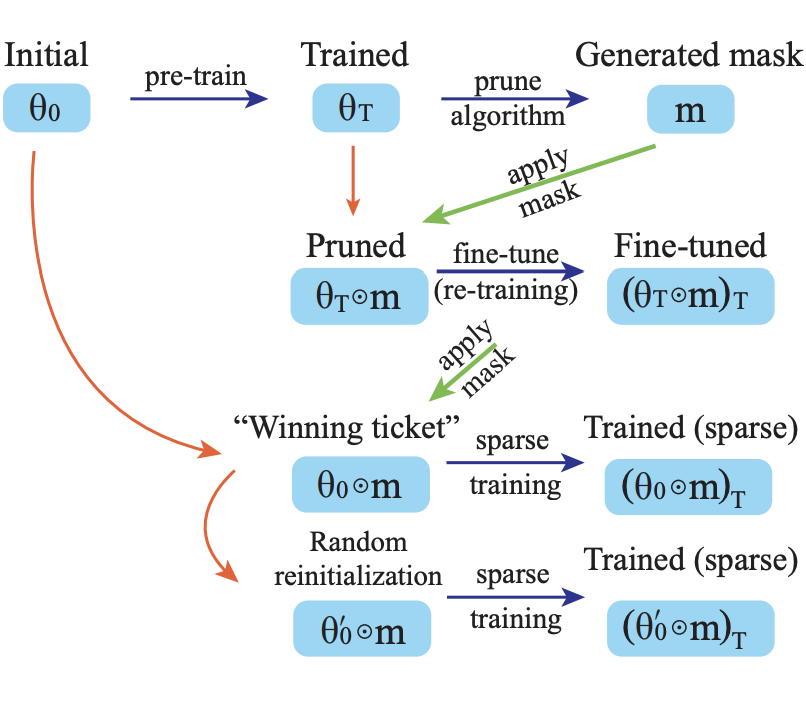

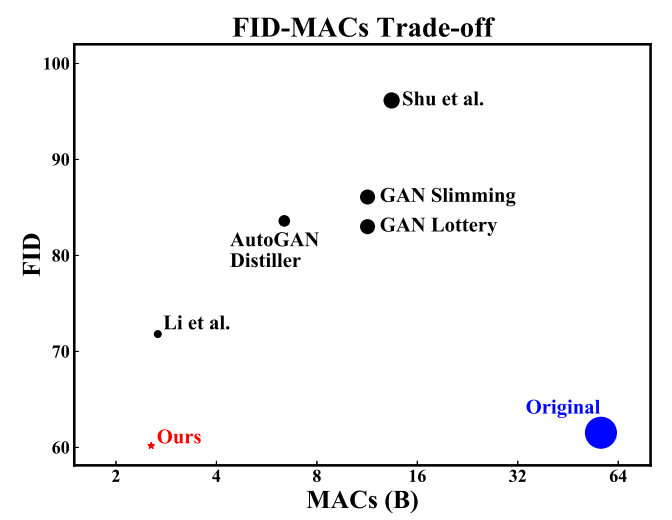

Lottery Ticket Implies Accuracy Degradation, Is It a Desirable Phenomenon?

|

|

Flow Guided Transformable Bottleneck Networks for Motion Retargeting

|

|

Motion Representations for Articulated Animation

|

|

Teachers Do More Than Teach: Compressing Image-to-Image Models

|

|

A Good Image Generator Is What You Need for High-Resolution Video Synthesis

|

|

SMIL: Multimodal Learning with Severely Missing Modality

|